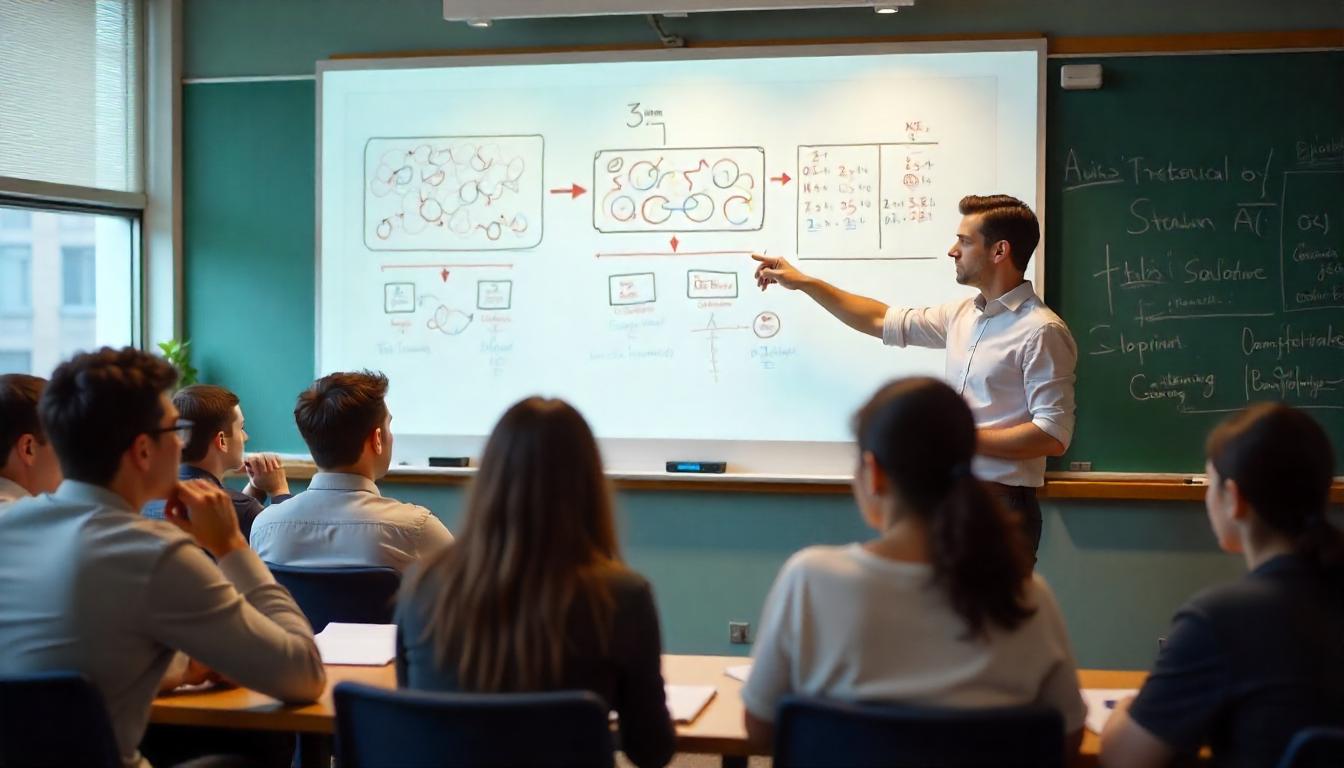

Artificial Intelligence (AI) has made remarkable strides in recent years, powering everything from voice assistants to image recognition. One of the techniques driving this rapid progress is called transfer learning. But what exactly is transfer learning, and how does it work?

In this article, we’ll break down the concept of transfer learning in simple terms and explore why it’s such a game-changer for AI development.

What Is Transfer Learning?

Transfer learning is a method in AI where a model trained on one task is reused or adapted for a different, but related, task. Instead of building and training a new model from scratch, transfer learning leverages the knowledge gained from a previous task to accelerate learning on a new task.

Think of it like this: if you already know how to ride a bicycle, learning to ride a motorcycle is much easier because many of the basic skills transfer over.

Why Is Transfer Learning Important?

Training AI models from the ground up often requires huge amounts of data and computational power. For many organizations, especially smaller ones, this can be costly and time-consuming.

Transfer learning helps overcome these challenges by:

- Reducing Training Time: Models can learn faster when they start from existing knowledge.

- Lowering Data Requirements: You need less labeled data for the new task because the model has already learned many general-purpose features.

- Improving Performance: Starting from a pre-trained model often gives better results than training from scratch, especially when data for the new task is scarce.
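The first benefit can be illustrated with a deliberately tiny sketch in plain Python. It is not how real AI models are trained; it assumes a one-parameter model `y = w * x` and two made-up, closely related tasks, and every name in it (`fit_slope`, `loss`, the datasets) is hypothetical. It compares a few training steps from a cold start against the same steps from a value learned on the source task.

```python
def fit_slope(data, w_start, lr=0.05, steps=3):
    """Gradient descent on mean squared error for the model y = w * x."""
    w = w_start
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

def loss(data, w):
    """Mean squared error of the model y = w * x on the given data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

# Assumed toy tasks: the source slope (2.0) is close to the target slope (2.2).
source_data = [(x, 2.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]
target_data = [(x, 2.2 * x) for x in (1.0, 2.0)]  # only two labeled examples

# "Pre-train" on the source task with many steps.
w_pretrained = fit_slope(source_data, w_start=0.0, steps=100)

# Train on the target task for the same small number of steps from both starts.
w_cold = fit_slope(target_data, w_start=0.0)           # from scratch
w_warm = fit_slope(target_data, w_start=w_pretrained)  # from existing knowledge

print("from scratch:   ", loss(target_data, w_cold))
print("from pretrained:", loss(target_data, w_warm))
```

With the same training budget, the run that starts from the pre-trained value ends with a lower loss, because it begins closer to the target solution. That advantage depends on the two tasks being related, which is the assumption built into this toy setup.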

How Does Transfer Learning Work?

Here’s a simplified look at the process:

- Pre-training: A model is first trained on a large dataset for a general task. For example, an image recognition model might be trained on millions of images across thousands of categories.

- Fine-tuning: The pre-trained model is then adapted, or “fine-tuned,” on a smaller dataset specific to a new, related task. For example, the image model could be fine-tuned to recognize specific types of medical images.

- Deployment: The adapted model is used to make predictions or perform tasks in the new domain.

Because the model has already learned to detect general-purpose features during pre-training (edges, shapes, and textures in the image example), it can apply this knowledge to the new task more efficiently.
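The three steps above can be sketched in a few lines of plain Python. This is a minimal toy under stated assumptions, not a real deep learning workflow: the "model" is a single learned feature (the backbone) followed by a small output layer (the head), the two tasks are made-up linear functions, and all names (`features`, `train`, `freeze_backbone`, and so on) are hypothetical. Freezing the backbone during fine-tuning is one common strategy; in practice some or all pre-trained layers may also be updated.

```python
def features(backbone, x):
    """Backbone: turns a raw input into a learned feature."""
    return backbone["w"] * x + backbone["b"]

def predict(backbone, head, x):
    """Head: maps the backbone's feature to a task-specific output."""
    return head["a"] * features(backbone, x) + head["c"]

def mse(backbone, head, data):
    """Mean squared error of the full model on the given data."""
    return sum((predict(backbone, head, x) - y) ** 2 for x, y in data) / len(data)

def train(backbone, head, data, freeze_backbone, lr=0.05, epochs=500):
    """Gradient descent on mean squared error; returns updated copies."""
    backbone, head = dict(backbone), dict(head)
    n = len(data)
    for _ in range(epochs):
        g = {"w": 0.0, "b": 0.0, "a": 0.0, "c": 0.0}
        for x, y in data:
            f = features(backbone, x)
            err = 2 * (head["a"] * f + head["c"] - y) / n
            g["a"] += err * f
            g["c"] += err
            g["w"] += err * head["a"] * x
            g["b"] += err * head["a"]
        head["a"] -= lr * g["a"]
        head["c"] -= lr * g["c"]
        if not freeze_backbone:  # a frozen backbone keeps its pre-trained values
            backbone["w"] -= lr * g["w"]
            backbone["b"] -= lr * g["b"]
    return backbone, head

# 1. Pre-training: plenty of data for an assumed source task (y = 3x + 1).
source_data = [(i / 25 - 1, 3 * (i / 25 - 1) + 1) for i in range(51)]
backbone, source_head = train({"w": 0.5, "b": 0.0}, {"a": 0.5, "c": 0.0},
                              source_data, freeze_backbone=False)

# 2. Fine-tuning: five labeled examples for an assumed related task (y = -6x + 4).
target_data = [(x, -6 * x + 4) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
new_head = {"a": 0.0, "c": 0.0}  # fresh head for the new task
loss_before = mse(backbone, new_head, target_data)
tuned_backbone, target_head = train(backbone, new_head, target_data,
                                    freeze_backbone=True, epochs=300)
loss_after = mse(tuned_backbone, target_head, target_data)

# 3. Deployment: use the adapted model on an input it has not seen.
prediction = predict(tuned_backbone, target_head, 0.25)
print(f"target loss: {loss_before:.3f} -> {loss_after:.6f}")
print(f"prediction for x = 0.25: {prediction:.3f}")
```

Only the head is trained in step 2, so the backbone learned during pre-training is reused unchanged. With a real framework the same idea usually means loading a pre-trained network, replacing its final layer, and training on the new dataset.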

Real-World Examples of Transfer Learning

Transfer learning is behind many cutting-edge AI applications, including:

- Natural Language Processing (NLP): Models like GPT and BERT are pre-trained on massive text corpora and then fine-tuned for tasks such as translation, summarization, or chatbot dialogue.

- Computer Vision: Pre-trained models help in facial recognition, object detection, and medical image analysis without needing huge amounts of new data.

- Speech Recognition: Transfer learning enables voice assistants to better understand accents, dialects, and different languages by adapting pre-trained models.

Why Should You Care About Transfer Learning?

If you’re an AI developer, researcher, or enthusiast, transfer learning can save you significant time and resources while improving model performance. For businesses, it means AI solutions become more accessible and cost-effective.

Even if you’re not a technical expert, understanding transfer learning offers insight into how AI systems can be adapted to new tasks so quickly.

Final Thoughts

Transfer learning is a powerful technique that helps AI models learn new tasks faster and more efficiently by building on previously acquired knowledge. It’s one of the key reasons AI is advancing at such a rapid pace and becoming more practical across industries.

As AI continues to evolve, transfer learning is likely to remain central to building systems that adapt quickly to new tasks.